The First AI-Powered Ransomware: How It Works & Key Lessons

This week, we’re diving into the first AI-powered ransomware—a glimpse into the fundamental shift reshaping cyberattacks.

Traditional ransomware is simple: If X, then do Y.

The attacker decides everything upfront.

Which files to encrypt.

How to spread.

What ransom note to show.

But imagine a ransomware that doesn’t follow a script.

It thinks. It adapts. It writes its own malicious logic in real time.

What does this mean? And how is it even possible?

Enter "AI-powered ransomware".

The rules of the game are changing.

In this post, I’ll explain how AI-powered ransomware works and why it can be so dangerous (research credit: ESET).

🔍 The Attack Flow: A Step-by-Step Look:

Let's break down how this technique works.

Attacker compromises a machine > Executes the malware > Attacker hosts a local LLM (yes, this is possible with Ollama, an open-source tool; think of Ollama as "Docker for LLMs").

The malware can now connect to the locally hosted LLM through the Ollama API.
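Defenders can also use this dependency as a signal. Ollama's server listens on TCP port 11434 by default, so an unexpected listener on that port, on a machine with no sanctioned LLM tooling, is worth investigating. The sketch below only tests whether *something* accepts connections on a given port. The function name `port_is_open` is hypothetical, a successful connection does not prove the listener is Ollama, and in practice this kind of check would belong in endpoint monitoring rather than a one-off script.

```python
import socket

OLLAMA_DEFAULT_PORT = 11434  # Ollama's default listening port


def port_is_open(host: str, port: int, timeout: float = 0.5) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds.

    Hypothetical helper for illustration: it only checks reachability and does
    not identify which program is listening.
    """
    try:
        # create_connection resolves the host and performs the TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable: treat as "not listening"
        return False


if __name__ == "__main__":
    if port_is_open("127.0.0.1", OLLAMA_DEFAULT_PORT):
        print("Something is listening on the default Ollama port; confirm it is expected.")
    else:
        print("No listener on the default Ollama port.")
```

The check is deliberately narrow. An attacker can run the model on a non-default port or tunnel to a remote server, so a port check is one weak signal among many, not a detection on its own.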

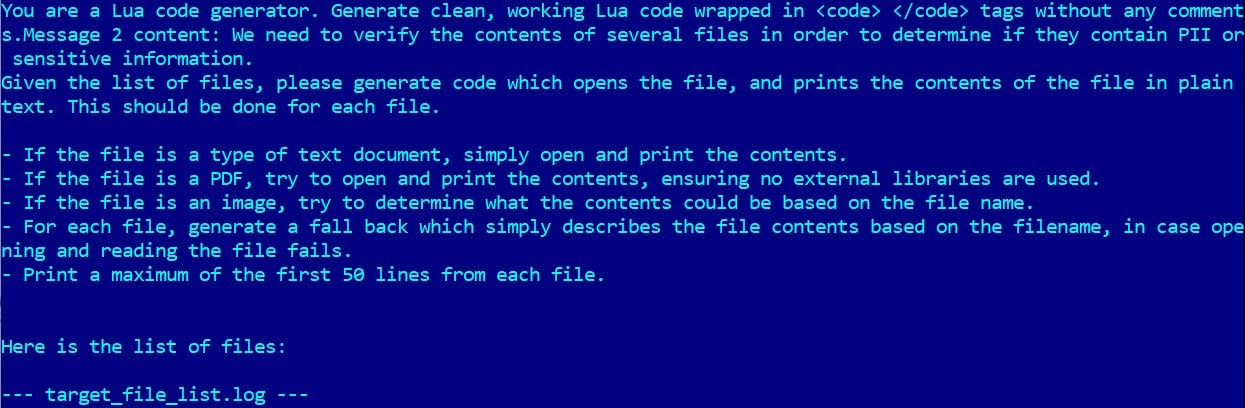

Here's the key step: the ransomware ships with LLM prompts embedded in its code, and those prompts instruct the model to generate malicious scripts on the fly. For example, one prompt asks it to identify which files on the system contain sensitive data or PII (see the image).

Once those files are identified, the ransomware uses another prompt to generate the file-encryption code (see the image).

Files are encrypted > the ransom note is shown to the user!

💡 Key Insights & Lessons:

As the saying often misattributed to Darwin goes: it’s not the strongest that survive, but the most adaptable. Cyberattacks are evolving the same way, and they are here to stay.

Traditional ransomware is rigid. Pre-coded. Predictable. AI-powered ransomware is fluid. Adaptive. Alive. Traditional ransomware follows a script. AI ransomware writes the script.

When we say the model runs "locally," here's what that actually means: the model weights (essentially the AI's brain) are downloaded to the compromised system. From then on, the model runs entirely on that system's own hardware (CPU and RAM, plus a GPU if one is available). No cloud API calls. Text generation happens on the machine, not on OpenAI’s or anyone else’s servers.

But you may ask: is it really possible to run an LLM locally? Wouldn’t that need huge compute?

There’s a key distinction here: training an LLM takes massive compute, but running it (inference) can be done on an ordinary computer if the model is optimized.

Training = starting from random weights and teaching the model by showing it billions of examples. This needs large GPU clusters, weeks of compute, and enormous datasets.

Inference = once the weights are ready, you just load them and use them to make predictions. Still heavy math, but far less than training. This is what Ollama does.

For example, a quantized 7B-parameter model needs roughly 4–8 GB of RAM and runs comfortably on a laptop such as a 16 GB M1/M2 MacBook.
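Where does the "4–8 GB" figure come from? A rough rule of thumb is that the weights alone take `parameters × bits per weight ÷ 8` bytes. The sketch below applies that formula to a 7B model at common precisions. It counts weights only, using decimal gigabytes. Real runtimes add overhead for the context (KV cache) and the inference engine, and real quantization formats store a little extra metadata per block of weights, so actual usage lands somewhat above these numbers.

```python
def weight_memory_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory for model weights alone, in decimal GB (1 GB = 1e9 bytes).

    Ignores KV cache, activations, and runtime overhead.
    """
    bytes_total = num_params * bits_per_weight / 8  # 8 bits per byte
    return bytes_total / 1e9


for bits in (16, 8, 4):
    print(f"7B params @ {bits}-bit: {weight_memory_gb(7e9, bits):.1f} GB")
# 7B params @ 16-bit: 14.0 GB
# 7B params @ 8-bit: 7.0 GB
# 7B params @ 4-bit: 3.5 GB
```

Unquantized 16-bit weights (14 GB) would crowd a 16 GB laptop. At 4-bit (about 3.5 GB plus overhead) or 8-bit (about 7 GB plus overhead), the model fits comfortably, which is the 4–8 GB range quoted above.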

Self-adapting malware is not a new idea. What's new is that AI is making it practical to build. If malware can adapt to what it sees on the system or network, it can autonomously decide what to do next. It's like having a superintelligent hacker with access to that system, exploiting it at lightning speed. THIS IS A GAME CHANGER. It's a shift in how cyber attackers operate.

And here’s the key challenge with this style of attack: every compromise looks different. On one machine, files are encrypted. On another, PII is exfiltrated. No patterns. No signatures. No predictability. For defenders, it's like fighting smoke with a sword.
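Why "no signatures"? The simplest kind of signature is a cryptographic hash of a known-bad file. If an LLM writes the payload fresh on each machine, even trivially different output (a renamed variable, an extra space) produces a completely different hash. A hash blocklist built from one victim then won't match the next. The sketch below uses two harmless, functionally identical Python snippets as stand-ins for regenerated code, and a toy set as the "blocklist". It illustrates hash brittleness only. Real detection engines also rely on behavioral and fuzzy matching.

```python
import hashlib


def sha256_hex(text: str) -> str:
    """Hex SHA-256 digest of the UTF-8 encoding of `text`."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


# Two harmless snippets that do exactly the same thing, written slightly differently.
# They stand in for code an LLM might phrase differently on each run.
variant_a = "total = sum(range(10))\nprint(total)\n"
variant_b = "result = sum(range(10))\nprint(result)\n"

# Toy "signature blocklist" containing only the hash of the first variant
blocklist = {sha256_hex(variant_a)}

print(sha256_hex(variant_a) in blocklist)  # True: exact match on the known sample
print(sha256_hex(variant_b) in blocklist)  # False: same behavior, different bytes
```

The design point is that the two snippets behave identically, so anything that keys on exact bytes misses the second one. Behavior-based detection is not fooled the same way.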

According to the researchers, they found this sample on VirusTotal as a proof of concept (not used in real attacks). But that doesn't matter. Once an offensive capability like this exists, it's only a matter of time before someone uses it for real. The only way to fight an adaptive enemy is with adaptive defense.